Addictive apps gather data on children

Apps and games use manipulative tactics to keep kids online longer, and extract data about them.

Many apps and games use manipulative tactics to keep kids online longer and to extract data about them. Companies want kids’ identifying information so they can sell it, track kids’ interests, show them ads, and sell them products. High-tech “smart toys” can gather data on kids, too, and send it to the manufacturer. Many of these apps and games are designed specifically to capture kids’ attention, and can use the same techniques as slot machines to keep kids glued to their screens, which allows companies to collect more data and serve more ads. (This is not unlike what apps and games try to do to, well, all of us.)

Most concerning of all, companies are currently free to design games and apps for children using the most manipulative techniques they can muster.

Features like autoplay and lock-screen notifications that prompt kids to check a platform can contribute to longer screen time. Some platforms have default settings that make kids share more information about themselves than is necessary for the service they’re getting. Still others entice kids to spend large sums of money in exchange for in-game perks.

Spending more and more time looking at screens, as these apps are designed to encourage, can pose a risk to kids’ physical and mental health. Excess screen time can lead to eye problems, and to a lack of sleep during developmental periods when getting enough sleep is crucial. Rising anxiety and depression in minors have also been linked to social media use.

There are lots of rules and laws written to protect kids in the offline world. We have laws to protect kids from health threats caused by lead paint and unsafe toys, and to enforce safety standards for things like car seats.

But even though kids are spending more and more time online to learn, socialize, and play, there are very few strong rules ensuring the web is a safe and healthy place for our youngest citizens.

California took an important step forward last year in passing the Age Appropriate Design Code Act, which CALPIRG supported. This law will require companies to consider the impacts their online services will have on kids before any child can log on.

Now, PIRG and other groups are calling on the Federal Trade Commission (FTC) to turn these ideas into a national rule that stops companies from purposefully designing addictive apps to manipulate kids.


Authors

R.J. Cross

Director, Don't Sell My Data Campaign, PIRG

R.J. focuses on data privacy issues and the commercialization of personal data in the digital age. Her work ranges from consumer harms like scams and data breaches, to manipulative targeted advertising, to keeping kids safe online. In her work at Frontier Group, she has authored research reports on government transparency, predatory auto lending and consumer debt. Her work has appeared in WIRED magazine, CBS Mornings and USA Today, among other outlets. When she’s not protecting the public interest, she is an avid reader, fiction writer and birder.

Find Out More

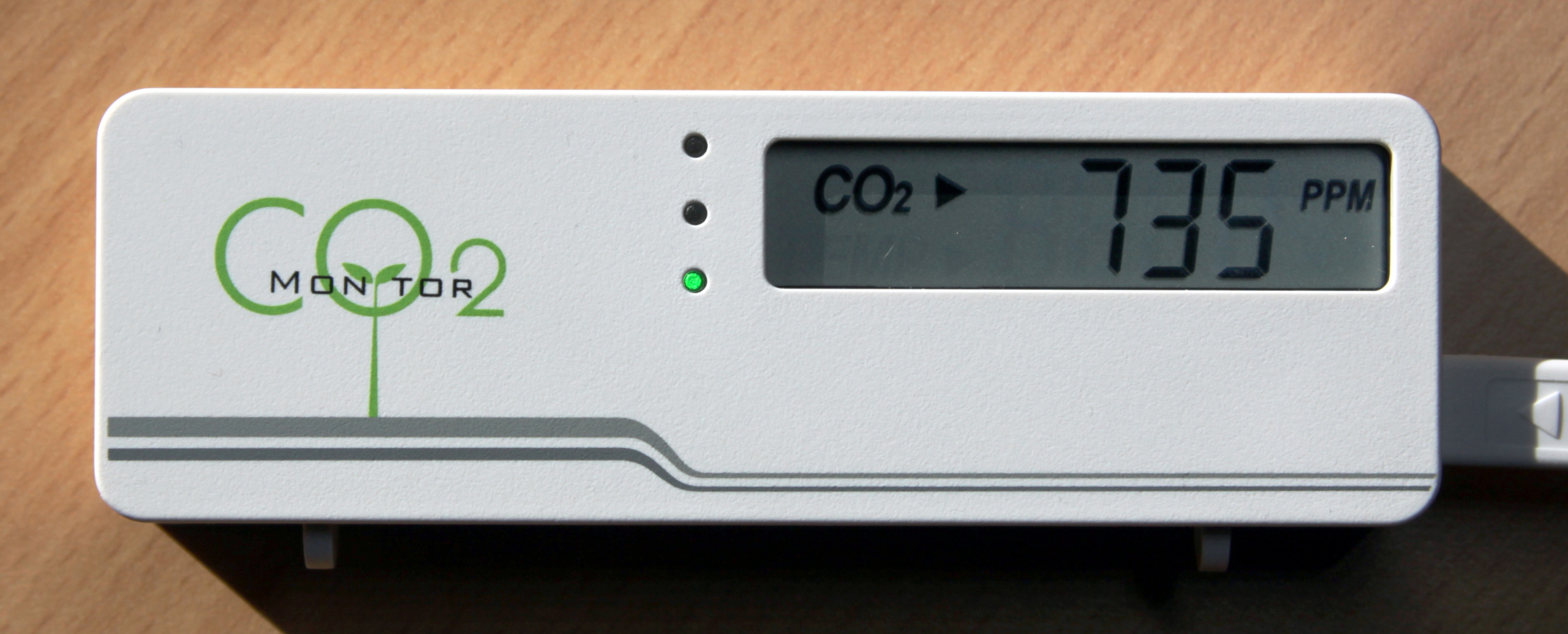

School air monitoring: A win for public health

Back to school on an electric bus

I deleted my Instagram as a teenager – here’s why